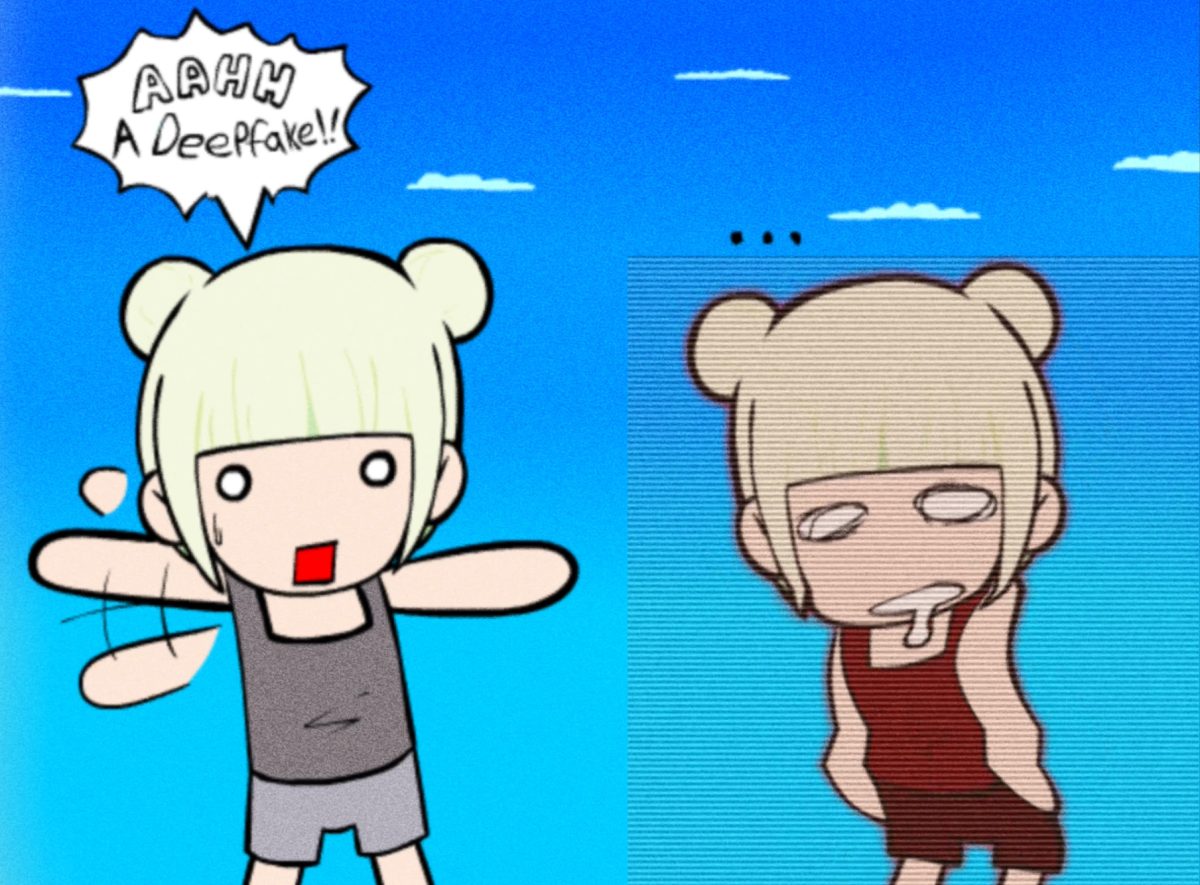

Deepfake audio technology is becoming highly advanced, making it easier for scammers to fake voices with alarming accuracy. For instance, scammers can pose as family members in trouble or as bank officials.

These scams take advantage of trust and emotions. In 2023, 31 percent of Americans received a deepfake scam call, with an average loss of $539. Since these scams are becoming a bigger problem, it is important to know how they work and how to protect ourselves.

The real impact of these scams is clear in what happened to Nicholas Hornstein Paulino’s cousin, Diego, who is twenty years old. He got a call from someone pretending to be their aunt, saying she was in trouble and needed money.

Diego was about to send the money when, luckily, the real aunt walked in from work. She saw him looking stressed and confused, and they realized he had almost been scammed. If she had not come home just then, Diego said he “totally would have fallen for it because the voice sounded so real.”

His family was shocked and decided to be more careful by texting each other to confirm identities. However, they also admitted that in a moment of panic, people do not always think clearly.

Businesses are not safe either. Forty-nine percent of businesses have faced deepfake fraud, losing an average of $450,000. Cyber fraud losses hit $12.5 billion in 2023, a 22 percent jump from the year before. People who are unaware of the risks are more likely to get scammed.

Scammers use different tricks to fool people. Some try emotional manipulation, like fake distress calls, to make victims panic, while others use high-pressure tactics to get people to send money or share personal info. Sometimes these tactics are combined in a single call, making the scam even harder to spot.

A study from the University of Florida found that people can recognize deepfake audio 73 percent of the time. However, many still fall for it. One big reason is overconfidence.

Many think they can tell when a voice is fake, but AI makes speech sound super real. Scammers also take advantage of emotions like fear and trust in familiar voices. On top of that, AI can use real voice recordings to create new speech that sounds almost identical to the real thing.

Even though deepfake scams are getting more advanced, there are ways to stay safe. For instance, always verify identity: do not trust a voice alone, and call back using a number you know is real. Having a family safe word can also help confirm identities in emergencies.

Be skeptical, especially if someone is pushing for money or personal info. AI-powered scam blockers, such as Hiya’s AI Phone app, can help catch fake voices. Additionally, keeping personal info off social media can make it harder for scammers to sound convincing.

Since these scams are becoming more common, experts are working on better ways to detect them. AI-powered fraud detection, voice authentication security, and stronger laws are all being developed to fight back. However, the best defense is awareness. Deepfake scams are not just a tech problem. They mess with trust. As AI voice cloning gets better, it is important to stay informed and cautious.