According to the Center for Democracy and Technology, 70% of students in the United States used generative artificial intelligence for academic or personal purposes last year.

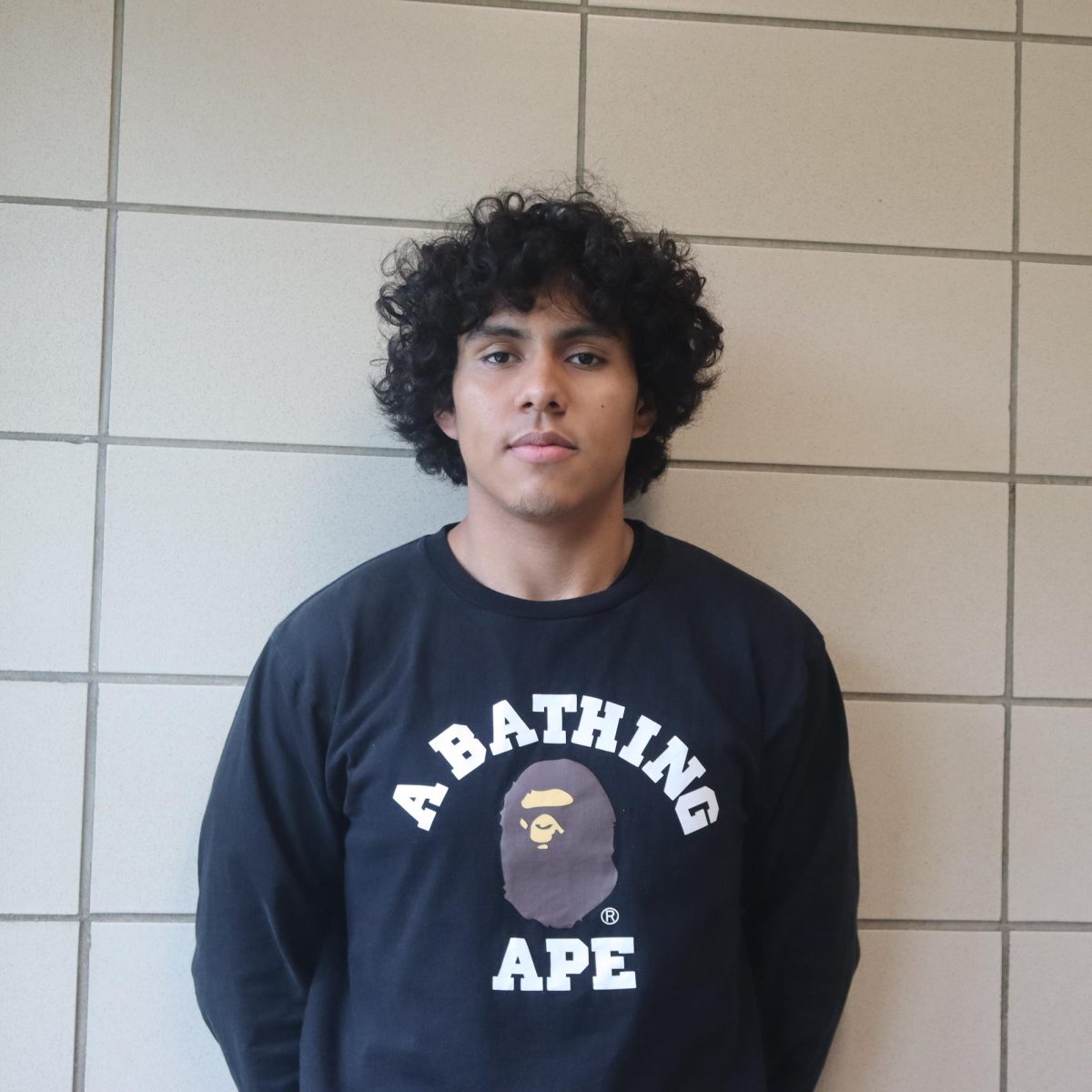

“(ChatGPT) helps me do my tasks faster and more efficiently compared to using Siri,” said Daniel, a senior at Pace High School whose name has been changed for privacy concerns.

The Center for Democracy and Technology defines generative AI as systems that “use machine learning to produce new content (e.g., text or images) based on large amounts of data that already exist. Generative AI is trained on enormous amounts of text and information for systems that will produce text responses or hundreds of millions of images for systems that will produce new images in response to prompts.”

The rise of AI detection tools in schools has sparked a debate about the role of artificial intelligence in education. While students use AI like ChatGPT for assignments, teachers are concerned about misuse and the accuracy of the detection tools.

Daniel said they use ChatGPT, an AI chatbot that answers questions based on data from the internet, for school projects because of its ability to deliver more accurate sourcing on recent news or events. They pay $20 a month for the premium version to gain access to more reliable and up-to-date information.

“It’s the equivalent of looking something up online,” they said. “I’d tell it to write me an outline of what and how the essay should be structured, then I use my own brain to figure out what words I’m gonna use.”

For this student, AI is simply another resource that may be the future of education.

“The way we learn hasn’t changed much over time, so inevitably something was gonna change,” Daniel said.

However, not everyone shares this view. According to the Center for Democracy and Technology, 89% of families want to be consulted if their school considers using AI to make decisions about how students learn or what educational opportunities they receive. When given a list of ways AI could be used in schools, the vast majority of parents said they would prefer to opt their children out of any decisions made by AI or automated algorithms. For example, 43% of guardians wanted their child opted out of AI being used to determine whether a student has cheated or plagiarized schoolwork. Furthermore, 57% of parents wanted their children opted out of AI being used to decide punishments, such as suspension or expulsion.

Daniel, for their part, does not agree with students who use ChatGPT to simply copy and paste content. They argued that this approach is easily detectable and misses the point of the tool.

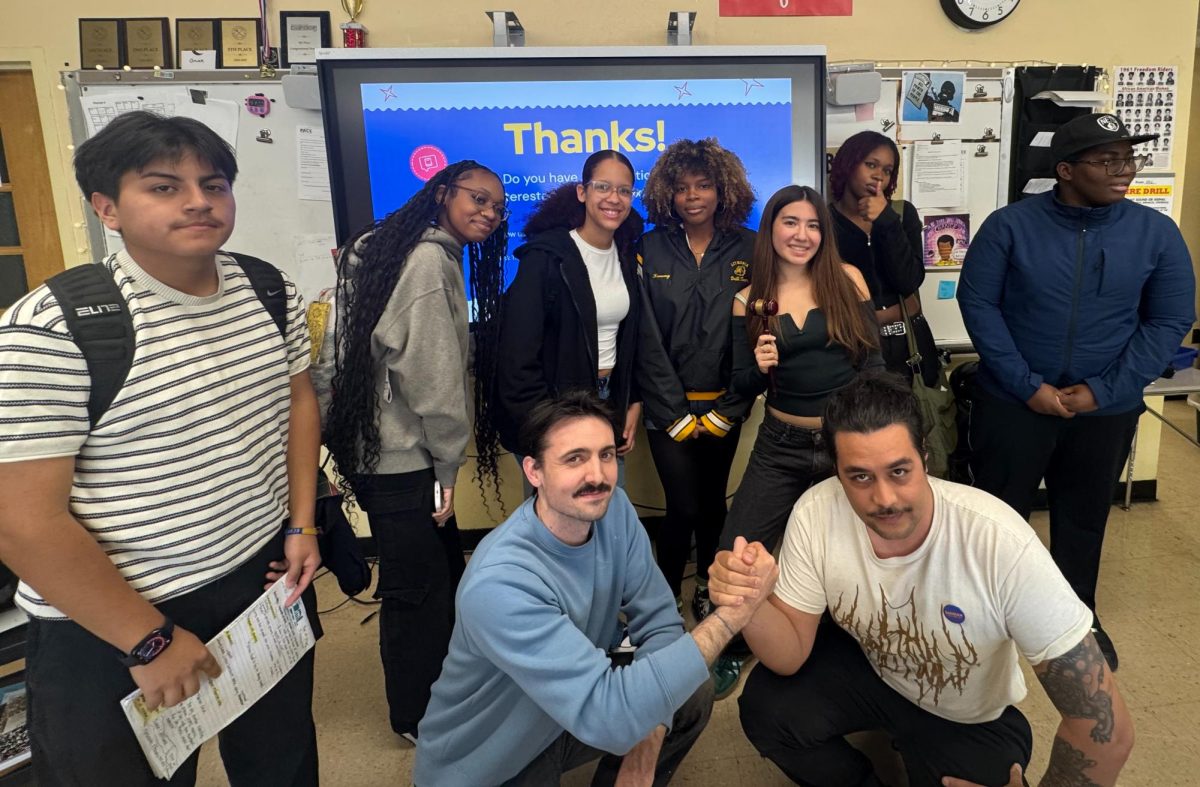

Honora Collins, the 12th-grade civics teacher, has seen firsthand how students are starting to use AI for their assignments and has figured out ways to detect it herself, without detection tools. Ms. Collins describes ChatGPT’s writing as “crappy” and calls it “predictive text,” arguing that it fails to showcase a student’s unique ideas or voice. For Ms. Collins, AI-generated writing simply doesn’t measure up to the depth of thought or personal perspective that students are capable of expressing.

“Frankly, when AI gets better than the student’s writing, then I have to figure out something else to do,” Ms. Collins said.

When asked if she encourages the use of AI, Ms. Collins said she views AI as an editing tool, not as a way to generate writing. She acknowledges the potential benefits of AI, like using it to check spelling and grammar, but she’s clear that relying heavily on AI for unoriginal content isn’t ideal, because “it’s not that good,” she said. “It’s more like a poor choice that a student even decided to use AI rather than their own brain.”

Daniel is concerned about the way current AI detection tools function.

“The way checkers work right now is by checking for patterns,” Daniel explained, adding that this pattern-based approach often leads to inaccurate results, false accusations, and bad grades. “I’ve had work that I’ve done myself, and it shows to be 90% AI, but I know I didn’t use it.”

To address this issue, Daniel suggested that teachers should not rely solely on detection tools but should also compare the flagged work with the student’s previous assignments.

Ms. Collins said she also finds AI detection tools unreliable. According to her, AI-generated work is easy to identify just by reading it because “student writing is usually stronger than what AI can do.” Instead of relying on detection websites, Ms. Collins believes teachers should focus on alternative methods of identifying misuse of AI.

“You also try to assign stuff that AI can’t do yet,” she explained. This method, she argues, is a much more effective way to prevent students from simply copying and pasting from ChatGPT. Ms. Collins wants kids to work on developing skills with their brains, rather than letting a computer do the thinking for them.

Both Ms. Collins and Daniel agree on the potential benefits of AI in the classroom, recognizing how AI can be a valuable resource when used correctly in schools.

“There are a lot of ways in which AI can be beneficial to teaching and learning,” Ms. Collins acknowledges, emphasizing that its potential shouldn’t be dismissed entirely.

However, she expresses her frustration with the lack of a higher authority regulating AI use in education.

“I wish they’d step in and regulate this so that it’s helpful to students and not harmful,” she says, highlighting the need for more oversight in how AI is used at Pace.

Reported with the instructional assistance of freelance journalist Arabella Saunders.